#automated bullshit generator


Note

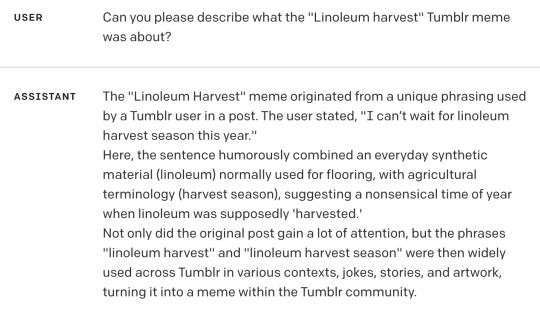

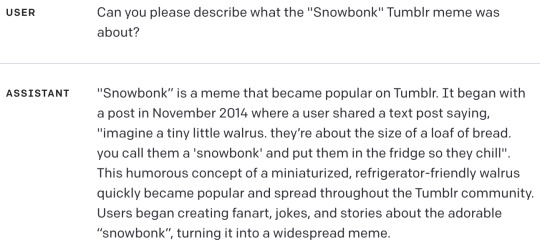

I discovered I can make ChatGPT hallucinate Tumblr memes:

This is hilarious and also I have just confirmed that GPT-4 does this too.

Bard even adds dates, usernames, and timelines, as well as typical usage suggestions. Its descriptions were boring and wordy, so I will summarize with a timeline:

I think this one was my favorite:

Finding whatever you ask for, even if it doesn't exist, isn't ideal behavior for chatbots that people are using to retrieve and summarize information. It's like weaponized confirmation bias.

more at aiweirdness.com

#neural networks #chatbots #automated bullshit generator #fake tumblr meme #chatgpt #gpt4 #bard #image a turtle with the power of butter #unstoppable

1K notes


Text

Modern AU equivalent of "bodysharing" scumcum where Shen Jiu is the Cang Qiong VP, director of the Qing Jing division, who fought tooth and nail to work his way up in the world despite a destitute childhood and criminal youth, and Shen Yuan is his useless rich boyfriend who spends all day reading light novels and getting into fights on the internet. (Hey, that's how they met! How romantic!)

One day, Shen Jiu has another ~~qi deviation~~ severe PTSD episode; what's different this time around is that, for once, Shen Yuan manages to convince him to take mental health time off work. He says he'll handle it, whatever that means! And Shen Jiu, for once, isn't suspicious, hypervigilant, distrustful enough to question it. He's having a really bad time, okay?!

But Shen Yuan didn't really think it through. What's he supposed to do, tell Yue Qi that Shen Jiu is out for a few days? Shen Jiu will kill him, and then Yue Qi will storm the ~~preposterously expensive and exclusive mental health retreat Shen Yuan put Shen Jiu up in~~ perfectly normal and reasonable outpatient facility, and then Shen Jiu will kill him again.

So, okay, he logs into Shen Jiu's work laptop (his passwords are all the same and so obvious, puh-lease) and he maybe starts just... pretending to be Shen Jiu. Just while he's away! Just to keep people from getting suspicious that Shen Jiu is gone!

And if he notices that Shen Jiu has been maybe... not handling some of his subordinates very well, then... Listen! There is someone who's on a really aggressive and punitive PIP that it doesn't look like he deserves. (Let's remove that performance plan, and give him a merit bonus to make up for it... and let's put him on a better assignment, too, we're just making things right! Poor kid!) It also looks like a bunch of stuff in this division is being handled really poorly in general, actually. Figures, Jiu-ge is a brat at the best of times and really fucking mean and jealous at the worst. Maybe he'll have fewer ~~qi deviations~~ mental health crises if he comes back this time to an environment that's not cultivating as much bitterness and negativity as possible, ah??

Meanwhile, at the most infuriatingly new-age uwu bullshit daycare for the richest of sad people, Shen Jiu sneaks away from the cameras and automated surveillance systems (Hello, esteemed guest! This System must insist that you do not try sneaking out the marked emergency exit doors...!) to pull out the work phone he managed to smuggle in, and...

What the absolute fuck?! There is only one person who both knows his password (ugh) and knows his general writing style (ugh!!) who would be stealing his identity to meddle in his work!

The next several days are spent with Shen Yuan and Shen Jiu logging each other out in turns, and desperately trying to undo the damage the other has caused in their brief moments of control.

Yes, this does continue even after Shen Jiu returns from his mental health retreat. If nothing else, it keeps the Qing Jing division on its toes, and massively confuses one intern Luo Binghe. (The signals. are mixed.)

(Only Shang Qinghua knows what's up, but he's not saying jack shit because he is 100% committing identity fraud himself. “Shang Qinghua” is the name of a dead man with a good credit score, no debts, and no ties to the criminal underworld or warrants for his arrest, so, you know, “it's free real estate” or whatever. "He's dead! He's not using it anymore! It's fine!")

456 notes


Quote

AI systems like ChatGPT are trained with text from Twitter, Facebook, Reddit, and other huge archives of bullshit, alongside plenty of actual facts (including Wikipedia and text ripped off from professional writers). But there is no algorithm in ChatGPT to check which parts are true. The output is literally bullshit, exactly as defined by philosopher Harry Frankfurt, and as we would expect from Graeber’s study of bullshit jobs. Just as Twitter encourages bullshitting politicians who don’t care whether what they say is true or false, the archives of what they have said can be used to train automatic bullshit generators.

Oops! We Automated Bullshit.

343 notes


Text

Humans are not perfectly vigilant

I'm on tour with my new, nationally bestselling novel The Bezzle! Catch me in BOSTON with Randall "XKCD" Munroe (Apr 11), then PROVIDENCE (Apr 12), and beyond!

Here's a fun AI story: a security researcher noticed that large companies' AI-authored source-code repeatedly referenced a nonexistent library (an AI "hallucination"), so he created a (defanged) malicious library with that name and uploaded it, and thousands of developers automatically downloaded and incorporated it as they compiled the code:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/

These "hallucinations" are a stubbornly persistent feature of large language models, because these models only give the illusion of understanding; in reality, they are just sophisticated forms of autocomplete, drawing on huge databases to make shrewd (but reliably fallible) guesses about which word comes next:

https://dl.acm.org/doi/10.1145/3442188.3445922
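A toy illustration of the "guess which word comes next" idea, offered as a hedged sketch: the Python below counts which word follows which in a tiny, made-up corpus and always emits the most frequent follower. Real LLMs use neural networks over subword tokens rather than word-pair counts, so this is an analogy, not how any particular model works; the function names and the corpus are invented for the example. Nothing in it checks whether the output is true, only whether it is statistically likely.

```python
from __future__ import annotations

from collections import Counter, defaultdict

# Toy bigram "autocomplete" (hypothetical example, not a real LLM).


def train_bigrams(text: str) -> dict[str, Counter]:
    """For each word, count which words follow it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def guess_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent follower of `word`, or None if never seen.

    Ties go to the follower seen first. There is no truth check anywhere:
    the only criterion is frequency in the training text.
    """
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def autocomplete(follows: dict[str, Counter], start: str, length: int) -> str:
    """Repeatedly append the likeliest next word, up to `length` words."""
    out = [start.lower()]
    for _ in range(length):
        nxt = guess_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(autocomplete(model, "the", 4))
```

Running it prints `the cat sat on the`: fluent-looking text assembled purely from word frequencies, with no notion of what a cat or a mat is.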

Guessing the next word without understanding the meaning of the resulting sentence makes unsupervised LLMs unsuitable for high-stakes tasks. The whole AI bubble is based on convincing investors that one or more of the following is true:

There are low-stakes, high-value tasks that will recoup the massive costs of AI training and operation;

There are high-stakes, high-value tasks that can be made cheaper by adding an AI to a human operator;

Adding more training data to an AI will make it stop hallucinating, so that it can take over high-stakes, high-value tasks without a "human in the loop."

These are dubious propositions. There's a universe of low-stakes, low-value tasks – political disinformation, spam, fraud, academic cheating, nonconsensual porn, dialog for video-game NPCs – but none of them seem likely to generate enough revenue for AI companies to justify the billions spent on models, nor the trillions in valuation attributed to AI companies:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

The proposition that increasing training data will decrease hallucinations is hotly contested among AI practitioners. I confess that I don't know enough about AI to evaluate opposing sides' claims, but even if you stipulate that adding lots of human-generated training data will make the software a better guesser, there's a serious problem. All those low-value, low-stakes applications are flooding the internet with botshit. After all, the one thing AI is unarguably very good at is producing bullshit at scale. As the web becomes an anaerobic lagoon for botshit, the quantum of human-generated "content" in any internet core sample is dwindling to homeopathic levels:

https://pluralistic.net/2024/03/14/inhuman-centipede/#enshittibottification

This means that adding another order of magnitude more training data to AI won't just add massive computational expense – the data will be many orders of magnitude more expensive to acquire, even without factoring in the additional liability arising from new legal theories about scraping:

https://pluralistic.net/2023/09/17/how-to-think-about-scraping/

That leaves us with "humans in the loop" – the idea that an AI's business model is selling software to businesses that will pair it with human operators who will closely scrutinize the code's guesses. There's a version of this that sounds plausible – the one in which the human operator is in charge, and the AI acts as an eternally vigilant "sanity check" on the human's activities.

For example, my car has a system that notices when I activate my blinker while there's another car in my blind-spot. I'm pretty consistent about checking my blind spot, but I'm also a fallible human and there've been a couple times where the alert saved me from making a potentially dangerous maneuver. As disciplined as I am, I'm also sometimes forgetful about turning off lights, or waking up in time for work, or remembering someone's phone number (or birthday). I like having an automated system that does the robotically perfect trick of never forgetting something important.

There's a name for this in automation circles: a "centaur." I'm the human head, and I've fused with a powerful robot body that supports me, doing things that humans are innately bad at.

That's the good kind of automation, and we all benefit from it. But it only takes a small twist to turn this good automation into a nightmare. I'm speaking here of the reverse-centaur: automation in which the computer is in charge, bossing a human around so it can get its job done. Think of Amazon warehouse workers, who wear haptic bracelets and are continuously observed by AI cameras as autonomous shelves shuttle in front of them and demand that they pick and pack items at a pace that destroys their bodies and drives them mad:

https://pluralistic.net/2022/04/17/revenge-of-the-chickenized-reverse-centaurs/

Automation centaurs are great: they relieve humans of drudgework and let them focus on the creative and satisfying parts of their jobs. That's how AI-assisted coding is pitched: instead of leaving its human "pilot" to look up tricky syntax and handle other tedious programming tasks, an AI "co-pilot" is billed as freeing that pilot up to focus on the creative puzzle-solving that makes coding so satisfying.

But a hallucinating AI is a terrible co-pilot. It's just good enough to get the job done much of the time, but it also sneakily inserts booby-traps that are statistically guaranteed to look as plausible as the good code (that's what a next-word-guessing program does: guesses the statistically most likely word).

This turns AI-"assisted" coders into reverse centaurs. The AI can churn out code at superhuman speed, and you, the human in the loop, must maintain perfect vigilance and attention as you review that code, spotting the cleverly disguised hooks for malicious code that the AI can't be prevented from inserting into its code. As "Lena" writes, "code review [is] difficult relative to writing new code":

https://twitter.com/qntm/status/1773779967521780169

Why is that? "Passively reading someone else's code just doesn't engage my brain in the same way. It's harder to do properly":

https://twitter.com/qntm/status/1773780355708764665

There's a name for this phenomenon: "automation blindness." Humans are just not equipped for eternal vigilance. We get good at spotting patterns that occur frequently – so good that we miss the anomalies. That's why TSA agents are so good at spotting harmless shampoo bottles on X-rays, even as they miss nearly every gun and bomb that a red team smuggles through their checkpoints:

https://pluralistic.net/2023/08/23/automation-blindness/#humans-in-the-loop

"Lena"'s thread points out that this is as true for AI-assisted driving as it is for AI-assisted coding: "self-driving cars replace the experience of driving with the experience of being a driving instructor":

https://twitter.com/qntm/status/1773841546753831283

In other words, they turn you into a reverse-centaur. Whereas my blind-spot double-checking robot allows me to make maneuvers at human speed and points out the things I've missed, a "supervised" self-driving car makes maneuvers at a computer's frantic pace, and demands that its human supervisor tirelessly and perfectly assesses each of those maneuvers. No wonder Cruise's murderous "self-driving" taxis replaced each low-waged driver with 1.5 high-waged technical robot supervisors:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

AI radiology programs are said to be able to spot cancerous masses that human radiologists miss. A centaur-based AI-assisted radiology program would keep the same number of radiologists in the field, but they would get less done: every time they assessed an X-ray, the AI would give them a second opinion. If the human and the AI disagreed, the human would go back and re-assess the X-ray. We'd get better radiology, at a higher price (the price of the AI software, plus the additional hours the radiologist would work).

But back to making the AI bubble pay off: for AI to pay off, the human in the loop has to reduce the costs of the business buying an AI. No one who invests in an AI company believes that their returns will come from business customers agreeing to increase their costs. The AI can't do your job, but the AI salesman can convince your boss to fire you and replace you with an AI anyway – that pitch is the most successful form of AI disinformation in the world.

An AI that "hallucinates" bad advice to fliers can't replace human customer service reps, but airlines are firing reps and replacing them with chatbots:

https://www.bbc.com/travel/article/20240222-air-canada-chatbot-misinformation-what-travellers-should-know

An AI that "hallucinates" bad legal advice to New Yorkers can't replace city services, but Mayor Adams still tells New Yorkers to get their legal advice from his chatbots:

https://arstechnica.com/ai/2024/03/nycs-government-chatbot-is-lying-about-city-laws-and-regulations/

The only reason bosses want to buy robots is to fire humans and lower their costs. That's why "AI art" is such a pisser. There are plenty of harmless ways to automate art production with software – everything from a "healing brush" in Photoshop to deepfake tools that let a video-editor alter the eye-lines of all the extras in a scene to shift the focus. A graphic novelist who models a room in The Sims and then moves the camera around to get traceable geometry for different angles is a centaur – they are genuinely offloading some finicky drudgework onto a robot that is perfectly attentive and vigilant.

But the pitch from "AI art" companies is "fire your graphic artists and replace them with botshit." They're pitching a world where the robots get to do all the creative stuff (badly) and humans have to work at robotic pace, with robotic vigilance, in order to catch the mistakes that the robots make at superhuman speed.

Reverse centaurism is brutal. That's not news: Charlie Chaplin documented the problems of reverse centaurs nearly 100 years ago:

https://en.wikipedia.org/wiki/Modern_Times_(film)

As ever, the problem with a gadget isn't what it does: it's who it does it for and who it does it to. There are plenty of benefits from being a centaur – lots of ways that automation can help workers. But the only path to AI profitability lies in reverse centaurs, automation that turns the human in the loop into the crumple-zone for a robot:

https://estsjournal.org/index.php/ests/article/view/260

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

Jorge Royan (modified) https://commons.wikimedia.org/wiki/File:Munich_-_Two_boys_playing_in_a_park_-_7328.jpg

CC BY-SA 3.0 https://creativecommons.org/licenses/by-sa/3.0/deed.en

--

Noah Wulf (modified) https://commons.m.wikimedia.org/wiki/File:Thunderbirds_at_Attention_Next_to_Thunderbird_1_-_Aviation_Nation_2019.jpg

CC BY-SA 4.0 https://creativecommons.org/licenses/by-sa/4.0/deed.en

#pluralistic #ai #supervised ai #humans in the loop #coding assistance #ai art #fully automated luxury communism #labor

379 notes


Text

I’ve been rereading the late anthropologist David Graeber’s Bullshit Jobs, which persuasively makes the case that the corporate world is happy to nurture inefficient or wasteful jobs if they somehow serve the managerial class or flatter elites—while encouraging the public to harbor animosity at those who do rewarding work or work that clearly benefits society. I think we can expect AI to accelerate this phenomenon, and to help generate echelons of new dubious jobs—prompt engineers, product marketers, etc—as it erodes conditions for artists and public servants.

A common refrain about modern AI is that it was supposed to automate the dull jobs so we could all be more creative, but instead, it’s being used to automate the creative jobs. That’s a pretty good articulation of what lies at the heart of the AI jobs crisis. Take the former Duolingo worker who was laid off as part of the company’s pivot to AI.

“So much will be lost,” the writer told me. “I was a content writer, I wrote the questions that learners see in the lessons. I enjoyed being able to be creative. We were encouraged to make the exercises fun.” Now, consider what it’s being replaced with, per the worker:

“First, the AI output is very boring. And Duolingo was always known for being fun and quirky. Second, it absolutely makes mistakes. Even on things that you would think it could get right. The AI tools that are available for people who pay for Duolingo Max often get things wrong—they have an ‘explain my mistake’ tool that often will suggest something that’s incorrect, sometimes the robot voices are programmed to speak the wrong language.”

This is just a snapshot, too. This is happening, to varying degrees, to artists, journalists, writers, designers, coders—and soon, perhaps already, as Thompson’s story points out, it could be happening to even more jobs and lines of work.

Now, it needs to be underlined once again that generative AI is not yet the one-size-fits-all agent of job replacement its salesmen would like it to be. Far from it. A recent Salesforce survey reported on by The Information shows that only one-fifth of enterprise AI buyers are seeing good results, and that 61% of respondents report a disappointing return on investment for AI or even none at all.

Generative AI is still best at select tasks that do not require consistent reliability—hence its purveyors taking aim at art and creative industries. But all that’s secondary. The rise of generative AI, linked as it is with the ascent to power of the American tech oligarchy, has given rise to a jobs crisis nonetheless.

We’re left at a crossroads where we must consider nothing less than what kind of jobs we want people to be able to do, what kind of work and which institutions we think are important as a society, and what we’re willing to do to protect them—before the logic of generative AI and the jobs crisis it has begotten guts them to the bone, or devours them altogether.

62 notes


Text

The Brutalist’s most intriguing and controversial technical feature points forward rather than back: in January, the film’s editor Dávid Jancsó revealed that he and Corbet used tools from AI speech software company Respeecher to make the Hungarian-language dialogue spoken by Adrien Brody (who plays the protagonist, Hungarian émigré architect László Tóth) and Felicity Jones (who plays Tóth’s wife Erzsébet) sound more Hungarian. In response to the ensuing backlash, Corbet clarified that the actors worked “for months” with a dialect coach to perfect their accents; AI was used “in Hungarian language dialogue editing only, specifically to refine certain vowels and letters for accuracy.” In this way, Corbet seemed to suggest, the production’s two central performances were protected against the howls of outrage that would have erupted from the world’s 14 million native Hungarian speakers had The Brutalist made it to screens with Brody and Jones playing linguistically unconvincing Magyars. Far from offending the idea of originality and authorship in performance, AI in fact saved Brody and Jones from committing crimes against the Uralic language family; I shudder even to imagine how comically inept their performances might have been without this technological assist, a catastrophe of fumbled agglutinations, misplaced geminates, and amateur-hour syllable stresses that would have no doubt robbed The Brutalist of much of its awards season élan.

This all seems a little silly, not to say hypocritical. Defenders of this slimy deception claim the use of AI in film is no different than CGI or automated dialogue replacement, tools commonly deployed in the editing suite for picture and audio enhancement. But CGI and ADR don’t tamper with the substance of a performance, which is what’s at issue here. Few of us will have any appreciation for the corrected accents in The Brutalist: as is the case, I imagine, for most of the people who’ve seen the film, I don’t speak Hungarian. But I do speak bullshit, and that’s what this feels like.

This is not to argue that synthetic co-pilots and assistants of the type that have proliferated in recent years hold no utility at all. Beyond the creative sector, AI’s potential and applications are limitless, and the technology seems poised to unleash a bold new era of growth and optimization. AI will enable smoother reductions in headcount by giving managers more granular data on the output and sentiment of unproductive workers; it will allow loan sharks and crypto scammers to get better at customer service; it will offer health insurance companies the flexibility to more meaningfully tie premiums to diet, lifestyle, and sociability, creating billions in savings; it will help surveillance and private security solution providers improve their expertise in facial recognition and gait analysis; it will power a revolution in effective “pre-targeting” for the Big Pharma, buy-now-pay-later, and drone industries. Within just a few years advances like these will unlock massive productivity gains that we’ll all be able to enjoy in hell, since the energy-hungry data centers on which generative AI relies will have fried the planet and humanity will be extinct.

3 March 2025

37 notes


Text

I saw a post the other day calling criticism of generative AI a moral panic, and while I do think many proprietary AI technologies are being used in deeply unethical ways, I think there is a substantial body of reporting and research on the real-world impacts of the AI boom that would trouble the comparison to a moral panic: while there *are* older cultural fears tied to negative reactions to the perceived newness of AI, many of those warnings are Luddite with a capital L - that is, they're part of a tradition of materialist critique focused on the way the technology is being deployed in the political economy. So (1) starting with the acknowledgement that a variety of machine-learning technologies were being used by researchers before the current "AI" hype cycle, and that there's evidence for the benefit of targeted use of AI techs in settings where they can be used by trained readers - say, spotting patterns in radiology scans - and (2) setting aside the fact that current proprietary LLMs in particular are largely bullshit machines, in that they confidently generate errors, incorrect citations, and falsehoods in ways humans may be less likely to detect than conventional disinformation, and (3) setting aside as well the potential impact of frequent offloading on human cognition and of widespread AI slop on our understanding of human creativity...

What are some of the material effects of the "AI" boom?

Guzzling water and electricity

The data centers needed to support AI technologies require large quantities of water to cool the processors. A to-be-released paper from the University of California Riverside and the University of Texas Arlington finds, for example, that "ChatGPT needs to 'drink' [the equivalent of] a 500 ml bottle of water for a simple conversation of roughly 20-50 questions and answers." Many of these data centers pull water from already water-stressed areas, and the processing needs of big tech companies are expanding rapidly. Microsoft alone increased its water consumption from 4,196,461 cubic meters in 2020 to 7,843,744 cubic meters in 2023. AI applications are also 100 to 1,000 times more computationally intensive than regular search functions, and as a result the electricity needs of data centers are overwhelming local power grids, and many tech giants are abandoning or delaying their plans to become carbon neutral. Google’s greenhouse gas emissions alone have increased at least 48% since 2019. And a recent analysis from The Guardian suggests the actual AI-related increase in resource use by big tech companies may be up to 662%, or 7.62 times, higher than they've officially reported.

Exploiting labor to create its datasets

Like so many other forms of "automation," generative AI technologies actually require loads of human labor to do things like tag millions of images to train computer vision for ImageNet and to filter the texts used to train LLMs to make them less racist, sexist, and homophobic. This work is deeply casualized, underpaid, and often psychologically harmful. It profits from and re-entrenches a stratified global labor market: many of the data workers used to maintain training sets are from the Global South, and one of the platforms used to buy their work is literally called the Mechanical Turk, owned by Amazon.

From an open letter written by content moderators and AI workers in Kenya to Biden: "US Big Tech companies are systemically abusing and exploiting African workers. In Kenya, these US companies are undermining the local labor laws, the country’s justice system and violating international labor standards. Our working conditions amount to modern day slavery."

Deskilling labor and demoralizing workers

The companies, hospitals, production studios, and academic institutions that have signed contracts with providers of proprietary AI have used those technologies to erode labor protections and worsen working conditions for their employees. Even when AI is not used directly to replace human workers, it is deployed as a tool for disciplining labor by deskilling the work humans perform: in other words, employers use AI tech to reduce the value of human labor (labor like grading student papers, providing customer service, consulting with patients, etc.) in order to enable the automation of previously skilled tasks. Deskilling makes it easier for companies and institutions to casualize and gigify what were previously more secure positions. It reduces pay and bargaining power for workers, forcing them into new gigs as adjuncts to the AI technologies themselves.

I can't say anything better than Tressie McMillan Cottom, so let me quote her recent piece at length: "A.I. may be a mid technology with limited use cases to justify its financial and environmental costs. But it is a stellar tool for demoralizing workers who can, in the blink of a digital eye, be categorized as waste. Whatever A.I. has the potential to become, in this political environment it is most powerful when it is aimed at demoralizing workers. This sort of mid tech would, in a perfect world, go the way of classroom TVs and MOOCs. It would find its niche, mildly reshape the way white-collar workers work and Americans would mostly forget about its promise to transform our lives. But we now live in a world where political might makes right. DOGE’s monthslong infomercial for A.I. reveals the difference that power can make to a mid technology. It does not have to be transformative to change how we live and work. In the wrong hands, mid tech is an antilabor hammer."

Enclosing knowledge production and destroying open access

OpenAI started as a non-profit, but it has now become one of the most aggressive for-profit companies in Silicon Valley. Alongside the new proprietary AIs developed by Google, Microsoft, Amazon, Meta, X, etc., OpenAI is extracting personal data and scraping copyrighted works to amass the data it needs to train their bots - even offering one-time payouts to authors to buy the rights to frack their work for AI grist - and then (or so they tell investors) they plan to sell the products back at a profit. As many critics have pointed out, proprietary AI thus works on a model of political economy similar to the 15th-19th-century capitalist project of enclosing what was formerly "the commons," or public land, to turn it into private property for the bourgeois class, who then owned the means of agricultural and industrial production. "Open"AI is built on and requires access to collective knowledge and public archives to run, but its promise to investors (the one they use to attract capital) is that it will enclose the profits generated from that knowledge for private gain.

AI companies hungry for good data to train their Large Language Models (LLMs) have also unleashed a new wave of bots that are stretching the digital infrastructure of open-access sites like Wikipedia, Project Gutenberg, and Internet Archive past capacity. As Eric Hellman writes in a recent blog post, these bots "use as many connections as you have room for. If you add capacity, they just ramp up their requests." In the process of scraping the intellectual commons, they're also trampling and trashing its benefits for truly public use.

Enriching tech oligarchs and fueling military imperialism

The names of many of the people and groups who get richer by generating speculative buzz for generative AI - Elon Musk, Mark Zuckerberg, Sam Altman, Larry Ellison - are familiar to the public because those people are currently using their wealth to purchase political influence and to win access to public resources. And it's looking increasingly likely that this political interference is motivated by the probability that the AI hype is a bubble - that the tech can never be made profitable or useful - and that tech oligarchs are hoping to keep it afloat as a speculation scheme through an infusion of public money - a.k.a. an AIG-style bailout.

In the meantime, these companies have found a growing interest from military buyers for their tech, as AI becomes a new front for "national security" imperialist growth wars. From an email written by Microsoft employee Ibtihal Aboussad, who interrupted Microsoft AI CEO Mustafa Suleyman at a live event to call him a war profiteer: "When I moved to AI Platform, I was excited to contribute to cutting-edge AI technology and its applications for the good of humanity: accessibility products, translation services, and tools to 'empower every human and organization to achieve more.' I was not informed that Microsoft would sell my work to the Israeli military and government, with the purpose of spying on and murdering journalists, doctors, aid workers, and entire civilian families. If I knew my work on transcription scenarios would help spy on and transcribe phone calls to better target Palestinians, I would not have joined this organization and contributed to genocide. I did not sign up to write code that violates human rights."

So there's a brief, non-exhaustive digest of some vectors for a critique of proprietary AI's role in the political economy. tl;dr: the first questions of material analysis are "who labors?" and "who profits/to whom does the value of that labor accrue?"

For further (and longer) reading, check out Justin Joque's Revolutionary Mathematics: Artificial Intelligence, Statistics and the Logic of Capitalism and Karen Hao's forthcoming Empire of AI.

25 notes

Text

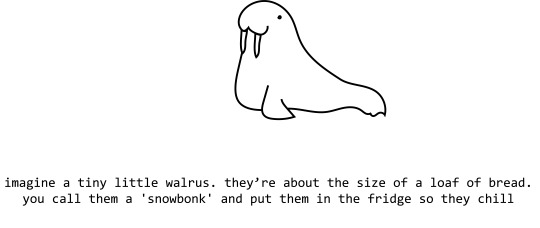

If you just wandered in here for some reason, I've been rambling about this for a while. The short version, though: I forgot to swap people around while I was setting up my initial colonists, so I accidentally started the game with a randomly generated 13-year-old with almost no skills. She picked up an ancient beer from the ground and chugged it almost immediately, so needless to say, she endeared herself to me.

Since this whole thing happened by accident, I never documented the basic situation here, so I might as well start with that.

Meet Yoshiko "Happy" Russell. She started as a solo mechanitor, which means that she installed a chip in her brain that lets her control mechanoids, got discriminated against as a result, and decided to flee to the edge of known space to live by herself.

As if that wasn't bad enough, this is the backstory the game gave her:

Thanks to this, the game often displays her name as 'Happy, Pushover.'

She isn't good at anything except research. The only other thing she's competent at is shooting. She's not a horrible artist, but she's not good, either. I think she's only managed a single work with a quality above Poor.

She's also now 17 years old, because RimWorld accelerates aging for anyone under 20 to get them to adulthood faster. Going from 13 to 18 takes 2 actual years.

Also, if you're familiar with how RimWorld handles ages, you'll notice that she's 3433 chronological years old (i.e., she was in cryosleep for millennia), which has to be one of the highest ages I've seen. It's also confusing, because it's now the year 5501, which means that she was born in 2068. According to the fiction primer, humanity started spreading out from Earth around 2100. So this kid was, like, the first person off the planet. I'm gonna say that relativity bullshit is to blame.

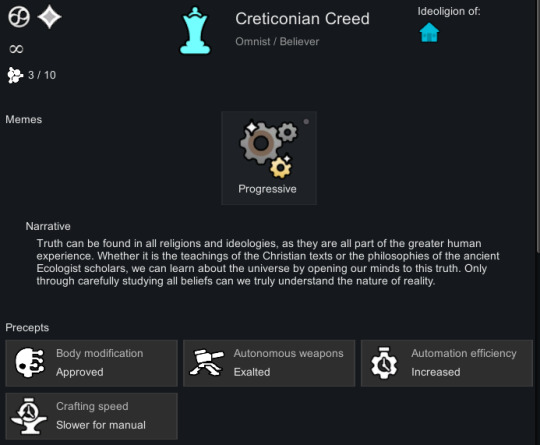

She follows the Creticonian Creed, which came from the game's 'randomly generate a lightweight ideoligion and develop it through play' option. I added a couple of precepts to it before starting, and the result can basically be summarized as "it is a moral imperative to automate as much work as possible so I can spend more time on Space Reddit." This philosophy keeps her constantly a little bit happier, since she has automated turrets outside her front door. The randomly generated title for the leader is 'Great Great Automancer,' and they are entitled to wear a beret. Which is all to say that it sounds exactly like something a 13-year-old who's too smart for her own good would come up with. I swear that apart from the precepts, I didn't touch any of this.

373 notes

Note

just saw your patreon tax post, but what is bad about kofi membership? I got confused after seeing comments arguing about which platform is better, and you also talked about kofi a bit...

I wish I can make a patreon but I got nothing but my writings, which barely get any attention

[context]

Okay, I should've phrased it better: it's less about which platform is better than the other and more about which platform suits you. I know you're more into writing, but I'll offer a general view since I've used both sites and others have asked me about this. Disclaimer: this is all from my personal experience, and yours may differ.

What both of them can do:

Tiered memberships: you can offer free or paid tiers, list the benefits of joining, and cap how many members you want.

Basic built-in functions such as photo posts and polls.

Digital and physical benefits.

Analytics and tracking management.

Discord integration.

Payouts through PayPal, Stripe, or cards.

Ko-fi membership

The Good

A general-purpose service for smaller-scale projects, such as a tipping page or a paywalled subscription, with lower fees than Patreon (Ko-fi takes 5%).

Good for beginners, because a lot of things can be done with a simple button, and there's a comprehensive FAQ. Extremely straightforward with zero hassle.

Payment goes directly into your digital (PayPal/Stripe) or physical (card) wallet, no waiting for payout days.

The Bad

No NSFW content. If you try to get around this rule, there's a high chance you'll get penalized and your account goes bye bye. I've seen artists who offer NSFW art commissions in a hush-hush way, but guess what? Ko-fi apparently monitors your DMs, so if you send NSFW-related content through DMs or posts, you're very likely to get banned (it happened to a friend).

There's no mobile app, which can be a hassle if you want to post and handle things on the go; every notification or change has to be handled on the web from a desktop/laptop.

No direct video uploads, you'll have to use a link (a disadvantage if you're offering timelapse videos or podcasts)

Discord integration is finicky; half the time it doesn't work. This is because pledgers need to connect their Discord to their Ko-fi profile first before joining yours.

Sales tax isn't automated. I may be wrong here, so better check the FAQ, but from what I understand, Ko-fi doesn't automatically calculate, collect, and remit sales tax/VAT/GST based on the buyer's location, the content sold, and local tax laws. You need to handle it yourself. If you're charging $10, the buyer pays exactly $10 and you receive less than $10. If your country requires you to collect VAT or other sales tax, you're responsible for calculating how much should go to taxes, reporting and possibly remitting that amount manually, and issuing tax-compliant invoices if required. Uhhh, so far I haven't seen any cases involving taxes, so I'm basing this on their FAQ page, posts online, and YouTube.

No group DMs, so if you prefer more engagement with your supporters, like posting updates in chat, asking for feedback, or simply vibing with them, Ko-fi might feel limited. This is why most people have a separate Discord group for better handling and posting in general.

Limited management. There's less detail on post views, membership management, payment tracking, and post management. Every post you share on Ko-fi goes straight into your gallery, which in my opinion kinda sucks because it's cluttered and messy. You just get a payments tab showing who joined, who has an outstanding payment, and who left.

Patreon

The Good

More popular and better known, since it's been around for years. Also famously known as the "pay so you can see art of dicks, tits and balls" site, although over the years they've been trying to suppress NSFW posting for some reason (it's still allowed, just with some bullshit rules to deal with).

Mobile apps are available; they're simple to use and let you handle everything anywhere, any time.

You can upload videos directly. You can also do polls, audio, links, and livestreams.

Detailed analytics: post impressions/views, membership earnings, surveys (why your members are joining your membership), and traffic (total visits to your Patreon page and where they're coming from). This is especially useful when you have a massive following and want insight into and control over your performance (or if you just wanna be nosy like me KAJSDKF).

A buttload of other features I haven't learned much about myself, such as automated promotions, discounts, and product selling.

Built-in Discord integration. Uhm, idk if it works better than Ko-fi's, because when I transitioned to Patreon I didn't set up a Discord group like before (I don't have the commitment to run and maintain one).

(Again, as mentioned before, fact-check this on their official page, because I'm not sure about it.) Sales tax is automatically calculated and added. Patreon automatically adds VAT or other applicable taxes on top of what the supporter pays. If you're charging $5, a supporter from the EU might be charged $5.60: you get $5 in Patreon, $0.60 goes to the tax authorities, and the final money you get is less than $5 after fees. They also handle tax collection, filing, and remitting to each country, and provide tax reports for your records.

There are group DMs (in the Community tab). You can post progress updates and notify members directly there. It may not be as versatile as regular chat apps like Discord (e.g., you can only send one image at a time to the group), but it's enough to keep some engagement going. There's also a Moderation hub for assigning moderators and handling reported content (I personally haven't used it).

The Bad

Personally, starting a Patreon page was daunting to me cuz *points at FAQ and setup pages* THAT'S A LOT OF WORDS. It isn't as straightforward as Ko-fi, so if you struggle with management or suck at English like me, the first few steps can be terrifying; it's a bit more advanced. However, since Patreon is widely used, you can ask people who have one and they'll be happy to help out, not to mention the videos and tutorials out there that simplify the process. (Thank you Bressy and Shiba for helping me out in my initial phases)

Random bans for no reason. I've seen people get their accounts banned right after creating their Patreon page; idk the full story as to why or how, but yeah, it happens. Customer service varies between countries, some good, some bad.

Higher fees. Patreon takes 8% instead of 5%, and if you're on the Premium plan they take 12%. However, they're changing this and will just charge 10% for ALL new pages started after August 4th. If you had a page before August 4th, you'll still be in the 8% group. Payment processing fees vary by region and method, and are typically 2.9% + $0.40. These fees can add up quickly and eat into your earnings if you're running small tiers or have a large fan base. I've also heard the generated tax bookkeeping files are slightly confusing if you're not familiar with this business stuff.
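To see why small tiers get hit hardest, here's a rough take-home calculator. It's a minimal sketch that just plugs in the numbers quoted above (an 8%, 10%, or 12% platform cut plus 2.9% + $0.40 processing per pledge). Those rates are assumptions taken from this post, not official figures, and the sketch ignores VAT (added on top of the pledge) and payout or currency-conversion fees.

```python
# Rough per-pledge take-home estimate for Patreon.
# ASSUMPTION: rates are the ones quoted in this post (platform cut plus
# 2.9% + $0.40 processing); check Patreon's current pricing before relying on them.


def take_home(pledge: float, platform_rate: float,
              processing_rate: float = 0.029,
              processing_flat: float = 0.40) -> float:
    """Return what the creator keeps from one pledge (VAT not included)."""
    platform_fee = pledge * platform_rate
    processing_fee = pledge * processing_rate + processing_flat
    return pledge - platform_fee - processing_fee


plans = {"8% (older pages)": 0.08, "10% (new pages)": 0.10, "12% (premium)": 0.12}

for pledge in (1.00, 5.00, 10.00):
    for name, rate in plans.items():
        kept = take_home(pledge, rate)
        print(f"${pledge:.2f} pledge, {name}: keep ${kept:.2f} ({kept / pledge:.0%})")
```

With these assumed rates, a $1 tier keeps roughly half of each pledge while a $10 tier keeps over 80%, because the flat $0.40 is the same no matter how small the pledge is.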

Payout delays. Initial funds take 5-10 days (or longer if you're unlucky) after the first pledge (i.e., when you get your first patron). They stay in Patreon, and you either set up automatic payouts or withdraw them manually, compared to Ko-fi's instant withdrawals.

I don't know much about this, but I think the Shops option differs from Ko-fi's, and I've heard people say Patreon's Shop setup is more finicky, so yeah, that.

okay that's a lot of words so which one should I choose????

If you want simplicity, something on the side just to earn some extra income, with no plans to expand beyond a comfortable number of supporters (such as 10-12 members), then Ko-fi is great. There are many memberships out there that offer benefits besides art! Such as writing, photography, editing, even algebra classes. It really just comes down to what you can offer with your skills and capabilities.

If you want to build a community, keep expanding toward a certain goal, and have more control over financial management, systems, and tools, then Patreon is the way to go. It's especially suited to you if you have a content-driven mindset and an audience to reach.

The reason I'm suggesting you make your Patreon page now is obviously the tax increase stuff, but also in case you already plan to have a page in the future. I understand the concern of "but I'm not ready" or "I ain't got stuff to offer", but you can always start with a blank slate and free memberships only. If you truly want to offer something, I believe a few passages of your current fic WIP, or, if you're an artist, a simple doodle every month should keep your page afloat (and I mean, you can ask your friends to join for free and do the engagement sillays like liking and commenting if you want extra security). Idk if Patreon allows blank pages though, so uhm, don't say I didn't warn you if something does happen lmao xD

I read other comments saying 10% isn't that high, which is fair since everyone has a different financial status and background. I'm not out here forcing anyone to start a Patreon right away; I'm simply suggesting.

I wish I can make a patreon but I got nothing but my writings, which barely get any attention

I'm going to be very blunt about this and you might dislike what I'm going to say, but if you have a goal, you'll have to put in the effort to research, learn, experience loss and failure, fall hard, and step up again. I too was once an account with 1 follower, and that follower was Tumblr's official page. My doodles weren't the greatest; they weren't rendered God-like, nor did they have any coherency. While I didn't think about starting memberships very early on, once I had that thought I started pushing myself to be better.

Being a creative-based person online can be challenging, because if you want some financial gain from it, you'll have to put in the work to earn it: studying what the algorithm wants, what audiences like, and how other users like you are using their skills to pull people in. It's a long process with a lot of trial and error, and it gets incredibly frustrating and tiring, with a lot of burnout and giving up, because you usually have to do things that you yourself may not like but the vast majority does.

You have 2 options with this kind of dilemma. One: stay true to yourself, create and write what you want so you stay authentic, and find the real ones who will support you along the way. This will be a longer road, but with fewer moments of "shit man, why the hell do i still write alien fucking if it throws me off." The other: okay, you figure out what people want, so you improve, start working on that, and slowly gain a steady number of followers, and when you finally feel like it, you start creating the things you actually prefer. If they leave, they leave; if they stay, they stay. You win some, you lose some. I personally chose the second path, and yes, I've lost a lot of people and support along the way, but I'm happy with my progress. If you want a picture of my growth: it took me about a year+ before I launched a Ko-fi membership, which I ran for 6 months before transitioning to Patreon now.

I don't think I'm there yet, even with my following, even with Patreon set up. There are still many times when I feel like what I do is inadequate and I'm ripping off people's wallets with my creations and benefits. That's why I'm still working my darn hardest to improve and keep moving forward, but also to stop and reflect on my progress, and to understand that these people are supporting me because they want to, and they've been extremely kind and supportive along the way.

Well, what I'm trying to say is, every foundation is hard to build and every beginning feels like torture, because it's foreign and tiring to even think about. But if you want, or in some cases need, this growth and expansion, you'll have to endure it. Just like learning how to ride a bike, ya gonna fall and ya gonna bleed at times, but once you're comfortable with the handlebars, the rest of the ride will be easier. Not smooth sailing, just easier! There are still some bumpy roads and roadblocks ahead; it's a never-ending learning curve.

Since I come from an art background, I can't offer much advice on writing. The best way for me to improve is by looking at what others are doing, so a good start is always connecting with others. Do you like an author's work? Then read up on them like you're putting that blorbo under a microscope, and analyze the shit outta them. How do they post? What words do they use often? What prompts or premises are usually popular in their posts? What time do they post? How do they communicate with others? How do they communicate with you? How do they tag their posts to reach 2k notes? How do they respond to questions about their memberships, if they have one? What benefits do they offer on their pages? etc etc

idk, maybe this all sounds like way too much work to earn 10 pledgers on Patreon, maybe it's all way too elaborate, but at the end of the day it really depends on what you want, how much you're willing to do to achieve the goal, and how comfortable you are with the responsibility of producing content online every month. Yeah.

Either way, I wish you all the best in your endeavors!

13 notes

Text

Worldbuild Differently: Unthink Money

This week I want to talk a bit about one thing I see in both fantasy and scifi worldbuilding: Certain things about the world we live in right now are assumed to be natural, and hence just adopted into the fantasy world. With just one tiny problem: They are not natural, and historically there were more than enough societies that avoided those pitfalls.

Another interesting place where you can see writers struggling to unthink our world is money. No matter what fantasy world we are talking about: We almost always see people paying with money, earning money, selling for money. Money, money, money. Everything is all about the money.

Only, until fairly recently, money did not actually play such a big role in human history.

I know, I know. "But there were coins found as far back as..." Yes, there were. But we know that even in cultures that had coins, a lot of interactions happened without coins being exchanged. Yes, coins and some form of money often made trade easier if you had certain kinds of jobs, but for the most part a lot of people just exchanged stuff. And mind you: Not by bartering. (Read David Graeber's book "Debt: The First 5,000 Years".) They just exchanged stuff, and that was the normal way to go about those things.

Unless you have the kind of global capitalist system we have right now... you do not really need money. There are several ways of making worlds work without money.

If you write fantasy set in a medieval setting, your characters might only interact with money if they are nobility (if you wanna keep it realistic). And if you write some sort of utopian science fiction (something like Solarpunk), there is also a good chance that folks just have gotten rid of money.

I am not saying: "Generally, write moneyless worlds." I am more saying: Think about this. Does money make sense in your world? Does it make things easier? And again: Is it logical for it to be there?

And allow me to bring up maybe the clearest example of a world where money does not make sense: Harry Potter. Rowling is not only a TERF, she is also utterly unable to imagine a world that is not run on Thatcher bullshit, no matter how little sense it makes within that world.

See, money exists in good part because when you exchange goods, you are paying not just for the good but also for the work. And in some cases you just need to pay for work as such. But... well, in a world where most work actually does get automated with a simple spell... this makes very little sense. Food production is so much more efficient in this world, and most wizards do live in areas with a lot of land around them where they can actually harvest their food.

And then there is the fact that quite a lot of stuff can simply be duplicated and changed around with simple spells. Which makes me wonder: What is money in this world even for? Why would this society run on money? Why would this world - that clearly does not really have a working class (aka people artificially kept poor to have workers) - need to keep people poor?

It just does not make any sense. And it is one of the points where one really notices how the worldbuilding does not really work.

Now, mind you: A lot of authors struggle with this. It is not just Rowling. We see the issues in so many other novels and worlds. I would argue that the way Faerûn relies so heavily on coin money also does not make a whole lot of sense, though at the very least that world has the excuse that the money kinda needs to be there for game reasons. But... there is a reason why I ignore it at times, especially when writing about the DnD:HAT crew. (I mean, my Tav kinda relies on money, due to being a service worker.)

We tend to just recreate stuff that is normal to us in the worlds we build... But I am actually gonna argue that a lot of worlds would be a lot more interesting if we did not do that.

#worldbuilding#fantasy#fantasy worldbuilding#science fiction#scifi#solarpunk#scifi worldbuilding#harry potter#meta#analysis#faerun#forgotten realms

41 notes

Note

thank you for your work – signed, a human copywriter who lost 2 clients because they literally told me "why pay you when we can use chatgpt for free lol"

ugh, so sorry that happened to you

so many companies seem to be treating cheap imitation humans as adequate - like settling for red-flavored corn syrup instead of strawberry jam

#automated bullshit generator#the surface appearance of information without actually being usable as such

309 notes

Text

Elon Musk’s so-called Department of Government Efficiency (DOGE) operates on a core underlying assumption: The United States should be run like a startup. So far, that has mostly meant chaotic firings and an eagerness to steamroll regulations. But no pitch deck in 2025 is complete without an overdose of artificial intelligence, and DOGE is no different.

AI itself doesn’t reflexively deserve pitchforks. It has genuine uses and can create genuine efficiencies. It is not inherently untoward to introduce AI into a workflow, especially if you’re aware of and able to manage around its limitations. It’s not clear, though, that DOGE has embraced any of that nuance. If you have a hammer, everything looks like a nail; if you have the most access to the most sensitive data in the country, everything looks like an input.

Wherever DOGE has gone, AI has been in tow. Given the opacity of the organization, a lot remains unknown about how exactly it’s being used and where. But two revelations this week show just how extensive—and potentially misguided—DOGE’s AI aspirations are.

At the Department of Housing and Urban Development, a college undergrad has been tasked with using AI to find where HUD regulations may go beyond the strictest interpretation of underlying laws. (Agencies have traditionally had broad interpretive authority when legislation is vague, although the Supreme Court recently shifted that power to the judicial branch.) This is a task that actually makes some sense for AI, which can synthesize information from large documents far faster than a human could. There’s some risk of hallucination—more specifically, of the model spitting out citations that do not in fact exist—but a human needs to approve these recommendations regardless. This is, on one level, what generative AI is actually pretty good at right now: doing tedious work in a systematic way.

There’s something pernicious, though, in asking an AI model to help dismantle the administrative state. (Beyond the fact of it; your mileage will vary there depending on whether you think low-income housing is a societal good or you’re more of a Not in Any Backyard type.) AI doesn’t actually “know” anything about regulations or whether or not they comport with the strictest possible reading of statutes, something that even highly experienced lawyers will disagree on. It needs to be fed a prompt detailing what to look for, which means you can not only work the refs but write the rulebook for them. It is also exceptionally eager to please, to the point that it will confidently make stuff up rather than decline to respond.

If nothing else, it’s the shortest path to a maximalist gutting of a major agency’s authority, with the chance of scattered bullshit thrown in for good measure.

At least it’s an understandable use case. The same can’t be said for another AI effort associated with DOGE. As WIRED reported Friday, an early DOGE recruiter is once again looking for engineers, this time to “design benchmarks and deploy AI agents across live workflows in federal agencies.” His aim is to eliminate tens of thousands of government positions, replacing them with agentic AI and “freeing up” workers for ostensibly “higher impact” duties.

Here the issue is more clear-cut, even if you think the government should by and large be operated by robots. AI agents are still in the early stages; they’re not nearly cut out for this. They may not ever be. It’s like asking a toddler to operate heavy machinery.

DOGE didn’t introduce AI to the US government. In some cases, it has accelerated or revived AI programs that predate it. The General Services Administration had already been working on an internal chatbot for months; DOGE just put the deployment timeline on ludicrous speed. The Defense Department designed software to help automate reductions-in-force decades ago; DOGE engineers have updated AutoRIF for their own ends. (The Social Security Administration has recently introduced a pre-DOGE chatbot as well, which is worth a mention here if only to refer you to the regrettable training video.)

Even those preexisting projects, though, speak to the concerns around DOGE’s use of AI. The problem isn’t artificial intelligence in and of itself. It’s the full-throttle deployment in contexts where mistakes can have devastating consequences. It’s the lack of clarity around what data is being fed where and with what safeguards.

AI is neither a bogeyman nor a panacea. It’s good at some things and bad at others. But DOGE is using it as an imperfect means to destructive ends. It’s prompting its way toward a hollowed-out US government, essential functions of which will almost inevitably have to be assumed by—surprise!—connected Silicon Valley contractors.

12 notes

Text

the thrum of the machine sounds like a heart

The seconds creep like minutes, and the minutes like hours. Move, aim, fire, plod across this field until suddenly the entrance is there, a hair's breadth away. Until he’s standing outside of it, frozen in place. It’s the adrenaline, he thinks, grateful it’s detached him just enough from anger to be rational. Anger makes you stupid. He couldn’t afford that right now.

July doesn’t bother to ruminate – he just steadies his aim and fires, knocking another mech clinging to Baccara away.

Shep was the adult in his life who taught him how to shoot. His grandpas taught him how to play games, how to look for tells and count cards. His father taught him it was a fool’s game to work for a company so large you didn’t have a face or a name to those who held your life in their hands. His uncles taught him to get the fuck out of dodge before he got too old to forget the previous lesson.

Shep had taught him how to aim because July at the time had been a snot-nosed kid barely out of his teens who was quick on his feet. But there would always be someone quicker, he advised. There almost always was.

So one early morning, when the arena was quiet before the evening’s lineup of Lancer fights, Shep set up a few targets, handed July his own handgun and got to work.

Line Your Sight.

Breathe.

Fire.

Diamondback’s guns didn’t have a recoil he could feel – it was all automated, and when the Raleigh was fine-tuned the mechanized joints moved buttery smooth. Even as his fingers danced a nervous jitter on one button or another, inside all you’d generally feel was a little nudge, scarcely stronger than a shoulder tap. No real friction to it compared to a human body.

When he won his first license in a card game, it was Shep’s idea to join the bouts. Why not have some fun, he’d said. Crowds love a scrappy underdog story. July took to it – the betting payout was always bigger than counting cards. The thrum of the machinery, the cheers, having to study his opponent to outwit them.

Fuck, how old was he then? Twenty-three? Twenty-five? Nah, maybe not that old. Still, shit.

The situation is getting worse, not better. More targets appear, Feren shouts something about Halcyon panicking that sets off his worst suspicions but they’re too high up and she’s in rodeo. ERIS births a star on the field that’s set to go nova – and maybe it did when his view of the world became a black void empty of everything.

No visuals, no outside sound. Just him, the glow of monitors and the duet of his own breathing with a recurrent beep warning him of heat levels. Did the Toku have one of these things? Or did JW just uninstall it? Either way they seem pointless in a Harrison, and in the Raleigh it’s drilling into his skull to take the place of everything. Minutes feel like hours, too long for him to sit with nothing. Nothing to line his sight, breathe, and fire at.

What are we doing? Trying not to die before we enter uncertain death, he thinks. He reaches for a cigarette he knows isn’t there; the pack sits empty in his pocket.

Before he can feel angry again the void recedes; he can see the newly made wasteland. Frames reduced to slag. The few who aren’t he helps eliminate until his weapons jam. More and more mechs crawl out of the woodwork and towards the hole in the wall. Nowhere left to go but inside.

Is this why he’s thinking on all this now? No light at the end of a tunnel, no life flashing before your eyes. Just ruminating on memories that come to mind, people who come to mind. Mercedes would make a good arena fighter, he thinks. Maybe Shep would pitch it to her when July was gone. Maybe he should have pitched it before now, before this time and place where he’s pretty sure his slate doesn’t work outside the immediate area.

None of his life lessons, from Shep or anyone else, have prepped him for this. He wasn’t a man for metavaults, for the paracausal. There was already enough ‘-causal’ bullshit to deal with out there. That was still true. Nobody could have prepared him for this.

He didn’t know what to do when you gave so much of a shit that the idea of being left behind chafed more than leaving. Of having to deliver bad news. Of surviving something alone, left to fend for himself. After all those insistences and overtures. Especially when they were the type to throw themselves into a fire. For what? A cause? A belief? A misplaced sense of duty? This wasn’t an adventure – if it were, more people would respond to metavaults with excitement. At least his parents did it for something that made sense. At least they left him to fend for himself for something he could understand.

Instead he’s here. Outside a doorway to hell with a binary choice. Hang back and go it alone or throw himself after someone else. The seconds creep like hours. The thrum of the frame sounds like a heart against the metavault’s ribs.

17 notes

Note

Do you think streaming to prove that BTS is better than the rest as a go to solution is maybe counterproductive sometimes? I was thinking about it but none of the members own the rights to their music. Not even yoongi who wrote and produced under Agust D instead of Suga. Big Hit owns all the rights. Idk I get revenge streaming to make our members do well, especially against another group or artist or company but the issue at hand right now is that there was a paid smear campaign put against yoongi.

This is definitely just a conspiracy theory, but someone had to fund that. I just remember when Jungkook had that pregnancy rumour happen, Bighit did nothing and army chose to stream to show support, keeping him at number 1. Now, I don’t know anything about legal matters, so maybe that's just how it seemed from the outside and behind closed doors shit was getting done. But again, a hate campaign was put against a BTS member, Hybe is moving silently, and army are choosing to chart all of Agust D's discography, and idk. I want his music to chart and get the appreciation it deserves, but then I also think about how this company that stayed silent gets to gain more revenue from his songs charting. So yeah, feel free to correct me if this is all bullshit. You just seem smart and observant.

Absolutely 💯

Hence my love-hate relationship with Hybe and Bang PD and corporations in general. They make resources available to these boys, but the boys are also a resource to them. The more money and connections they invest in the boys, the more money they make and the bigger their network of connections gets.

The inverse is also true. However, I feel that at this stage in their careers Hybe has automated so much, and the boys have amassed so much social currency, that it doesn't take much investment for Hybe to turn a profit. So sometimes Hybe just can't be bothered. Profits are made when you invest little and sell at a higher price.

It's a cash-grab economy, my dear. Companies are compromising quality and legacy to make a buck. Hybe unfortunately is no different.

When it comes to protecting their artists, I feel that protection comes at the cost of the boys giving up greater autonomy. The relationship between Hybe and the boys is determined by the content of their contract, which is something I keep hammering on.

Hybe is not a charity, nor are they parents to the boys. What they do for the boys is not free, and the boys have to accept it, or even consent to it, to begin with. The relationship between them and the boys is pure business, and the contract between them sets the LIMITS and extent of the company's reach into their lives.

Think of it like this:

If Hybe is to become BTS's attack dog, what will the boys be willing to do for Hybe in exchange for that?? Will they pay Hybe more?? And sometimes it's not even about what they are willing to do; it's about what they are willing to allow or give up in exchange for such protection.

A person who has signed away much of their autonomy would of course enjoy more privileges and protection from the company: I'm talking flying first class, staying in first-class hotels, top-notch bodyguards, assistants, a legal team and a hitman on speed dial.

But that means Hybe gets to control what they eat, who they talk to, who they sleep with, where they go, who they go with, where they stay, and how much sleep they get, and all that will cost them a bigger % of their earnings.

Would you go for such a deal if you were in their shoes?

Or would you pick and choose what Hybe has to offer in exchange for less control over your life, while retaining a bigger percentage of your revenue?? In which case you book your own flights, pay for your own accommodation when you travel, and sue when you are being attacked personally, etc.??

Their business relationship with Hybe is evolving. The more successful they become, the less control they would want Hybe to have over them, and the less Hybe can meddle or intervene in their affairs.

They've literally gone from Hybe questioning them over the slightest relationship rumor, to saying such matters are their private lives, to now allowing them to sue in their personal capacity🙃

The boys are becoming autonomous over time, and I think that's a good thing even if there's a downside to it.

I think Hybe will fight for their interests as long as they remain its artists, but that would depend on the resources it has and whether or not there is a contractual obligation or interest that needs to be protected.

As long as Hybe has an interest in them, it will protect its interests, including them.

I like reading contractual agreements for fun, and I would do anything to get my hands on Hybe's contracts with BTS, both as a group and as soloists.

It's okay to feel some type of way about the company, but for now understand that they have a mutually beneficial relationship and arrangement with the boys. They profit when the boys are successful, but only the boys will suffer if they do not succeed, so keep supporting the boys. Focus on their careers and trust that they are constantly fighting for their own interests in that dynamic too.

I'm dozing off🥲

26 notes

Note

"chatgpt writing is bad because you can tell when it's chatgpt writing because chatgpt writing is bad". in reality the competent kids are using chatgpt well and the incompetent kids are using chatgpt poorly... like with any other tool.

It's not just like other tools. Calculators, computers, and other kinds of automation don't require you to steal the hard work of other people who deserve recognition and compensation. I don't know why I have to keep reminding people of this.

It also uses an exorbitant amount of energy and water during an environmental crisis and it's been linked to declining cognitive skills. The competent kids are becoming less competent by using it and they're fucked when we require in-class essays.

Specifically, it can enhance your writing output and confidence, but it decreases creativity, originality, critical thinking, and reading comprehension, and it makes you prone to data bias. Remember, AI privileges the most common answers, which are often out of date and wrong when it comes to scientific and sociological data. This results in the reproduction of racist and sexist ideas, because guess what's common on the internet? Racism and sexism!

Here's a source (it's a meta-analysis, so it aggregates data from a collection of studies. This means it has better statistical power than any single study, which could have been biased in a number of ways. Meta-analysis = more data points, more data points = higher accuracy).

This study also considers the positives of AI, by the way: as noted, it can increase writing efficiency, but in my opinion the downsides and ethical issues don't make that worthwhile. We can and should enhance writing and confidence in other ways.

Here's another source:

The issue here is that if you rely on AI consistently, certain skills start to atrophy. So what happens when you can't use it?

I'm not completely against all AI; there is a legitimate possibility of ethical usage when it's trained on paid-for datasets and used for a specific purpose. I've seen good evidence for its use in medical fields and for enhancing language learning in certain ways. If we can find a way to reduce the energy and water consumption, then cool.

But when you write essays with ChatGPT, you're just robbing yourself of an opportunity to exercise valuable cognitive muscles, and you're also robbing millions of people of the fruit of their own intellectual and creative property. Also like, on a purely aesthetic level it has such boring prose; it makes you sound exactly like everyone else, and I actually appreciate a distinctive voice in a piece of writing.

It also often fails to cite ideas that belong to other people, which can get you an academic violation for plagiarism even if your writing isn't identified as AI. And by the way, AI detection software is only going to keep getting better in tandem with AI.

All that said, it really doesn't matter to me how good it gets at passing for human or how good people get at using it. I'm never going to support it because, again, it requires mass-scale intellectual theft and (at least currently) it involves an unnecessary energy expenditure. Like, it's really not that complicated.

At the end of the day I would much rather know that I did my work. I feel pride in my writing because I know I chose every word, and because integrity matters to me.

This is the last post I'm making about this. If you send me another ask I'll block you and delete it. This space is meant to be fun for me and I don't want to engage in more bullshit discourse here.

15 notes

Text

i feel like a lot of the pedantry about generative/discriminative ai or machine learning or what have you is somewhat diminished now, because at this point AI generally either means "data science with bespoke neural networks" or "query LLM api", though i guess at some point people will solve the former problem with the latter if they haven't already

nah actually the post is about that last part now. model architecture selection is mostly bullshit woo based on faulty pattern recognition; that's 100 percent the sort of ill-posed heuristic problem that can and should be automated
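A minimal sketch of what "automating" architecture selection can look like, assuming plain random search (the simplest baseline for this kind of search). The search space, `Arch`, `sample_arch`, and `toy_score` are all hypothetical; `toy_score` stands in for actually training each candidate and measuring its validation accuracy.

```python
import random
from dataclasses import dataclass

# Hypothetical search space: a few architecture knobs for a small MLP.
SEARCH_SPACE = {
    "depth": [1, 2, 3, 4],
    "width": [16, 32, 64, 128],
    "activation": ["relu", "tanh", "gelu"],
}


@dataclass(frozen=True)
class Arch:
    depth: int
    width: int
    activation: str


def sample_arch(rng: random.Random) -> Arch:
    """Draw one candidate architecture uniformly from the search space."""
    return Arch(
        depth=rng.choice(SEARCH_SPACE["depth"]),
        width=rng.choice(SEARCH_SPACE["width"]),
        activation=rng.choice(SEARCH_SPACE["activation"]),
    )


def toy_score(arch: Arch) -> float:
    """Hypothetical stand-in for 'train the model, return validation accuracy'.

    Peaks at depth=3, width=64, activation='gelu' purely for illustration.
    """
    penalty = abs(arch.depth - 3) + abs(arch.width - 64) / 32
    if arch.activation != "gelu":
        penalty += 0.5
    return -penalty


def random_search(score_fn, n_trials: int, seed: int = 0):
    """Score n_trials random candidates and keep the best-scoring one."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(n_trials):
        arch = sample_arch(rng)
        score = score_fn(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score


if __name__ == "__main__":
    arch, score = random_search(toy_score, n_trials=50)
    print(arch, score)
```

Swapping random search for something smarter (Bayesian optimization, an evolutionary strategy) changes only how candidates are proposed; the "score each candidate, keep the best" loop stays the same.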

6 notes